How to Choose the Right AI Tech Stack for Your Business

May 21, 2025

As AI becomes a cornerstone of digital transformation, more businesses are moving from pilot projects to full-scale deployments. According to Gartner, over 80% of enterprises will have adopted AI tech stack components by 2025 to accelerate innovation and gain a competitive edge. But what truly powers these breakthroughs? It’s not just algorithms; it’s the strategic selection of the right AI software stack, including the best AI tools, AI development frameworks, and the underlying machine learning stack that enables scalable, intelligent solutions across sectors.

Choosing the right AI tech stack for your business has become a necessity. Whether you’re a startup evaluating AI frameworks, a SaaS company that needs robust AI infrastructure, or an enterprise looking for scalable AI tools, the choices you make today will shape performance, cost-efficiency, and innovation tomorrow.

This guide covers open-source versus proprietary AI tools, essential artificial intelligence platforms, and the future of cloud-based AI development platforms, so you can build smart, sustainable, and strategic AI solutions.

What AI Will Bring in 2025 and Beyond

Artificial intelligence is no longer just about automation; it’s becoming the brain of the digital enterprise. In 2025 and beyond, businesses will see transformative AI capabilities that go far beyond process efficiency:

- Hyper-personalized customer interactions powered by real-time insights

- Autonomous AI-powered decision-making engines for dynamic business logic

- Widespread use of cloud-based AI development platforms for scalable innovation

- Dependable AI infrastructure for SaaS businesses, enabling seamless integration

- Intelligent edge computing with smart devices processing data on the fly

These advancements call for more than basic toolkits. They demand an integrated ecosystem built on a dependable machine learning stack and advanced deep learning tools that deliver speed, precision, and strategic growth.

Data in the AI Stack

Data is the lifeblood of any AI initiative. Your AI tech stack must include robust solutions for handling data throughout its lifecycle:

- Data Collection: Gathering data from various sources, which can include databases, APIs, sensors, and user interactions.

- Data Storage: Choosing scalable and efficient storage solutions capable of handling the volume and variety of data required for AI.

- Data Preprocessing: Using tools and techniques to clean, transform, and prepare data for model training, ensuring quality and consistency. Apache Spark and Pandas are crucial here.

- Data Exploration: Using tools to understand data patterns, identify relationships, and visualize insights, often with Python libraries like Matplotlib and Seaborn.

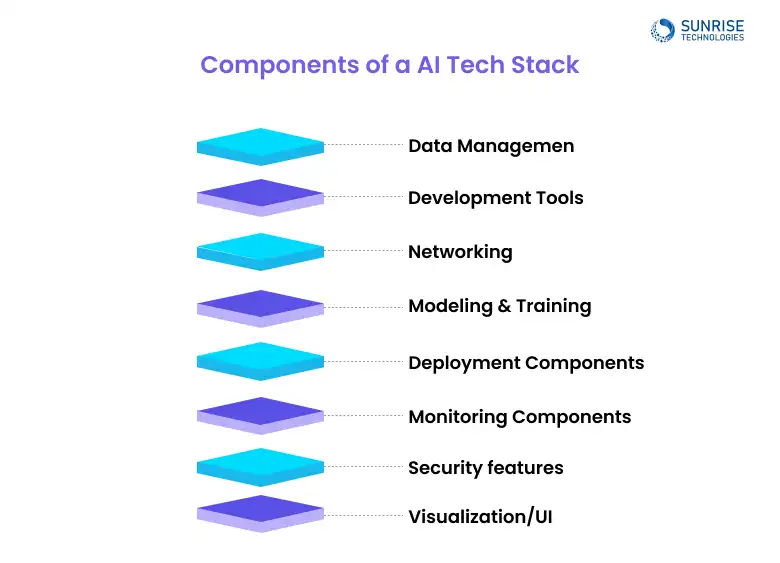

Components of an AI Tech Stack

Building an effective AI system requires a multi-layered architecture. Each layer in the modern AI tech stack plays a specialized role in data processing, model training, deployment, and monitoring.

| Component | Functionality | Key Tools & Technologies |
|---|---|---|
| Data Management | Ingestion, storage, preprocessing, governance | Apache Kafka, Airflow, AWS S3, Delta Lake, BigQuery |
| Development Tools | Code management, experimentation, collaboration | Jupyter, Git, VS Code, Weights & Biases, DVC |
| Networking | Connectivity across services, edge devices, cloud | gRPC, REST APIs, VPNs, Load Balancers, 5G/Edge networking |
| Modeling & Training | ML/DL algorithm development and training | TensorFlow, PyTorch, Scikit-learn, XGBoost, Hugging Face |
| Deployment Components | Serving models, CI/CD, scaling | Docker, Kubernetes, FastAPI, Triton Inference Server |
| Monitoring Components | Model health, data drift, performance tracking | MLflow, Prometheus, Grafana, Evidently AI |
| Security features | Identity, access management, data privacy & model protection | IAM, SSL, OAuth2, Vault, Alibi Detect |
| Visualization/UI | Dashboards, interpretability, interactive applications | Streamlit, Dash, React, Gradio, Kibana |

At the foundation of every AI architecture lies data management. This component ensures that data is collected from diverse sources, processed efficiently, stored securely, and governed with strict compliance standards. Platforms like Apache Kafka and Delta Lake power real-time ingestion and transformation, while BigQuery and AWS S3 serve as reliable backbones for analytics and storage.

Development tools empower data scientists and engineers to prototype, experiment, and collaborate in real time. Jupyter Notebooks and VS Code support interactive development, Git and DVC version code and data, and Weights & Biases tracks experiments and visualizes outcomes.

The networking layer in AI architectures ensures seamless communication between services, models, data pipelines, and even edge devices. Technologies like RESTful APIs, gRPC, and VPNs enable secure data transmission and efficient API orchestration. As AI moves to the edge, 5G and edge networking become crucial for low-latency, real-time inference and control.

The core of intelligence lies in the modeling and training components. Here, machine learning frameworks such as TensorFlow, PyTorch, and Scikit-learn enable businesses to design, train, and optimize algorithms. Libraries like Hugging Face Transformers accelerate the development of NLP models, while XGBoost remains a go-to for high-performance structured data modeling.

Once models are trained, they must be deployed in a scalable and maintainable way. Deployment components include containers (Docker), orchestrators (Kubernetes), and serving layers such as FastAPI and Triton Inference Server that expose models to applications. This layer ensures that models are accessible in real time, autoscale with traffic, and remain decoupled from the core application logic for flexibility.
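To make "autoscale with traffic" concrete, here is a sketch of the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The 10% tolerance band mirrors the HPA's default tolerance, and the min/max bounds stand in for `minReplicas`/`maxReplicas`. This is a simplified model for reasoning about capacity, assuming a single metric; the real controller also handles stabilization windows, missing metrics, and pod readiness.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling decision (a sketch, not the real controller).

    current_metric / target_metric is the usage ratio, e.g. requests per second
    per pod (or GPU utilization) versus the configured target value.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current replica count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))

# Inference pods handling 90 req/s each against a 60 req/s target: scale 4 -> 6.
print(desired_replicas(4, current_metric=90, target_metric=60))
```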

Post-deployment, continuous oversight is essential. Monitoring components track model health, accuracy, and latency, and detect data drift or concept drift in production. Prometheus and Grafana collect metrics and provide observability dashboards, MLflow tracks model versions and their metrics, and Evidently AI reports on data and model changes, enabling fast responses to degradation and anomalies.
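Drift detection often comes down to comparing a feature's production distribution with its training baseline. A common metric is the Population Stability Index (PSI), which sums (a − e) · ln(a / e) over histogram bins, where e and a are the baseline and production bin proportions. The sketch below is a standard-library illustration of that idea, not how Evidently AI computes drift; the bin count, the small epsilon, and the 0.2 alert threshold are conventional rules of thumb chosen here as assumptions.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a production sample.

    Bin edges come from the baseline's range; production values outside that
    range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

baseline = [x / 100 for x in range(1000)]     # roughly uniform on [0, 10)
shifted = [x / 100 + 3 for x in range(1000)]  # same shape, shifted by +3

DRIFT_THRESHOLD = 0.2  # assumed rule of thumb; tune per feature
for name, sample in [("baseline", baseline), ("shifted", shifted)]:
    score = psi(baseline, sample)
    print(name, round(score, 3), "DRIFT" if score > DRIFT_THRESHOLD else "ok")
```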

As AI systems grow in complexity, so does their attack surface. The security layer ensures that data, APIs, and models are protected against unauthorized access and breaches. Solutions like OAuth2, SSL, IAM, and HashiCorp Vault provide robust identity management and encryption, while tools like Alibi Detect help protect model integrity by detecting adversarial inputs, outliers, and drift.

Insights need to be understandable to stakeholders. The UI and visualization layer converts complex model outputs into intuitive dashboards and apps. Tools such as Streamlit, Gradio, Dash, and React make it easier to build interpretable, real-time interfaces that showcase AI results and help non-technical users make data-driven decisions.


AI Development Frameworks

AI development frameworks are the core tools used to design, train, test, and deploy intelligent models at scale across various domains. These frameworks for AI model development offer flexibility, modularity, and high-performance computing capabilities that streamline the entire machine learning lifecycle.

- TensorFlow: A powerful open-source deep learning framework developed by Google, ideal for production-grade model training and deployment, especially for computer vision and NLP applications.

- PyTorch: A flexible, Pythonic deep learning framework from Meta, widely used for research and fast prototyping thanks to its dynamic computation graphs.

- Scikit-learn: The go-to library for classical machine learning, offering tools for regression, classification, clustering, and dimensionality reduction.

- Keras: A high-level neural network API designed for rapid experimentation with deep neural networks. It originally ran on top of TensorFlow; Keras 3 can also use JAX or PyTorch as its backend.

- Hugging Face Transformers: An essential library for NLP model development, offering pre-trained transformer-based models such as BERT, GPT, and T5.

- XGBoost: A high-performance gradient boosting library well suited to structured (tabular) data and a frequent component of winning machine learning competition entries.

- ONNX (Open Neural Network Exchange): An open format for representing machine learning models that enables interoperability between frameworks, making it easier to move models between platforms like TensorFlow and PyTorch and other runtimes.

Comparison of Top AI Development Frameworks

| Feature | TensorFlow | PyTorch | Keras |
|---|---|---|---|
| Development | Developed by Google | Developed by Facebook AI Research (FAIR) | Developed by François Chollet |
| Type | Deep Learning Framework | Deep Learning Framework | High-level Neural Networks API |
| Graph Execution | Eager execution by default (TF 2.x); static graphs via `tf.function` | Dynamic Computational Graph | Follows its backend (TensorFlow, or JAX/PyTorch in Keras 3) |
| Ease of Use | Complex but production-optimized | Intuitive, research-friendly | Beginner-friendly and compact |
| Deployment Support | TF Serving, TF Lite, TensorFlow.js | TorchServe, ONNX | Tied to TensorFlow’s deployment tools |
| Community Support | Large global community, Google support | Active open-source contributions | Integrated within TensorFlow ecosystem |
| Use Cases | Enterprise ML, mobile, cloud AI | Academic research, experimental projects | Fast prototyping |
| Model Hub Availability | TensorFlow Hub | PyTorch Hub | Integrated via TensorFlow Hub |
| Pre-trained Model Support | Wide range of pre-trained models | Strong pre-trained support | Moderate (depends on TensorFlow backend) |

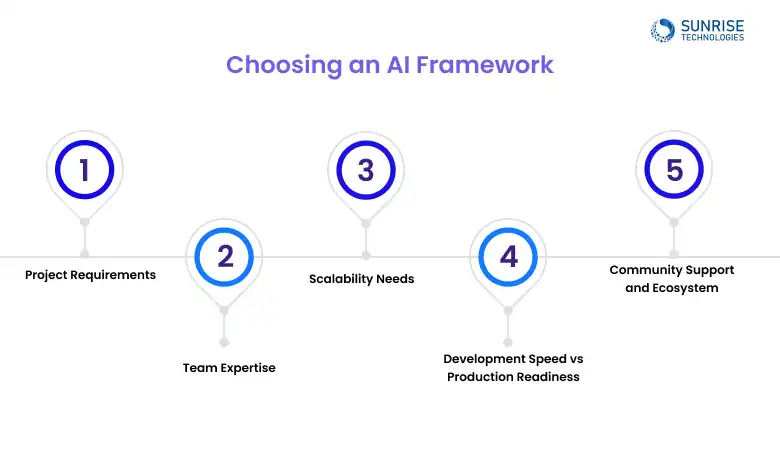

How to Choose the Right AI Framework

Choosing an AI framework, whether for cloud-based applications or large-scale enterprise systems, often depends on factors like:

- Project Requirements: The complexity of your AI models (e.g., deep learning vs. classical ML), the need for GPU acceleration, and deployment requirements will influence your choice.

- Team Expertise: Consider the existing skills and familiarity of your team with different frameworks.

- Scalability Needs: For scalable AI tools for enterprise solutions, frameworks with strong support for distributed computing (like TensorFlow and PyTorch) are often preferred.

- Development Speed vs. Production Readiness: PyTorch is often favored for research and rapid prototyping, while TensorFlow has strong production deployment capabilities.

- Community Support and Ecosystem: A large and active community provides better resources, documentation, and support.


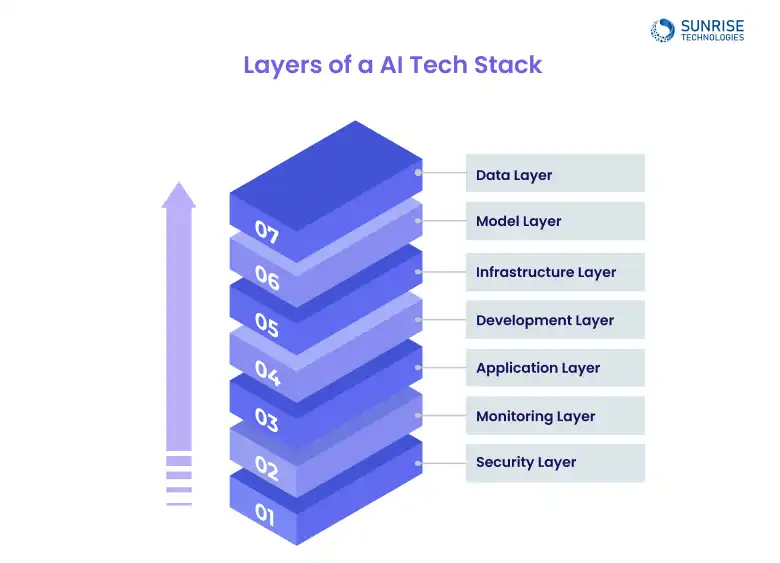

Layers of an AI Tech Stack

A modern AI tech stack is built on a multi-layered architecture that integrates data, models, infrastructure, and real-time applications to power intelligent automation. These layers form the backbone of scalable, production-grade artificial intelligence systems.

The data layer of an AI tech stack is the foundation, where structured, unstructured, and streaming data is captured, cleansed, and made analytics-ready. It enables real-time data ingestion and scalable storage for downstream AI operations.

- Manages data ingestion from diverse sources (IoT, apps, databases)

- Enables batch and streaming processing (Kafka, Apache Spark)

- Supports scalable cloud storage and data lakes

- Tools: Apache Kafka, Delta Lake, BigQuery, AWS S3, Apache Airflow
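Streaming processing frequently means aggregating events into fixed time windows. The sketch below simulates a tumbling-window count over an in-memory event list; in a real stack, events would arrive from a Kafka topic and a framework such as Spark Structured Streaming would manage windows, late data, and state. The event fields (`ts`, `device`) and the 60-second window are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator

def tumbling_window_counts(events: Iterable[dict], window_seconds: int = 60) -> Iterator[tuple]:
    """Yield (window_start, counts_per_device) each time a window closes.

    Assumes events arrive in timestamp order; out-of-order handling is omitted.
    """
    current_start = None
    counts = defaultdict(int)
    for event in events:
        start = event["ts"] - event["ts"] % window_seconds  # align to window boundary
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)  # window closed: emit and reset
            counts = defaultdict(int)
        current_start = start
        counts[event["device"]] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final window

stream = [
    {"ts": 0, "device": "sensor-a"},
    {"ts": 15, "device": "sensor-b"},
    {"ts": 42, "device": "sensor-a"},
    {"ts": 61, "device": "sensor-a"},
    {"ts": 130, "device": "sensor-b"},
]
for window_start, counts in tumbling_window_counts(stream):
    print(window_start, counts)
```

A batch job would compute the same aggregates over a complete, stored dataset; the streaming version trades that completeness for results as soon as each window closes.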

The model layer of an AI tech stack focuses on training and optimizing machine learning and deep learning models using advanced algorithms and frameworks tailored to business use cases.

- Develops and trains models using ML/DL libraries

- Enables model optimization, hyperparameter tuning, and transfer learning

- Includes pre-trained and custom model capabilities

- Tools: TensorFlow, PyTorch, Hugging Face, Scikit-learn, XGBoost

The AI infrastructure layer ensures scalable, high-performance computing through container orchestration and hardware acceleration for AI workloads across cloud, on-prem, or hybrid environments.

- Offers GPU/TPU-based computation and multi-node training

- Supports containerization and CI/CD for model delivery

- Enables multi-cloud and hybrid AI environment scalability

- Tools: Kubernetes, Docker, NVIDIA CUDA, AWS, Azure

The development layer for AI projects provides an environment for experimentation, versioning, debugging, and collaborative model lifecycle management using IDEs, notebooks, and MLOps tools.

- Facilitates model prototyping and debugging

- Supports experiment tracking, code versioning, and CI pipelines

- Promotes reproducibility and collaboration

- Tools: Jupyter Notebooks, Git, VS Code, Weights & Biases, DVC

The AI application layer enables real-time inferencing by integrating trained models into applications, microservices, or APIs that interact with business systems and user interfaces.

- Deploys models as RESTful APIs or containerized services

- Integrates models into front-end apps or internal tools

- Enables seamless scalability and business logic integration

- Tools: FastAPI, Flask, Triton Inference Server, Streamlit

The AI monitoring layer maintains model performance and system reliability by detecting drift, tracking metrics, and issuing alerts for retraining or tuning.

- Monitors inference latency, accuracy, and resource usage

- Detects concept drift and anomalous data shifts

- Automates retraining alerts and performance degradation responses

- Tools: MLflow, Grafana, Prometheus, Evidently AI
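Automated retraining alerts can start as simply as tracking accuracy over a sliding window of labeled production predictions and raising a flag when it falls below a threshold. This is a minimal sketch: the window size, threshold, minimum sample count, and the class name `RollingAccuracyMonitor` are all assumptions, and in production the metric would more likely be exported to Prometheus and alerted on through Grafana or Alertmanager than checked in-process.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Hypothetical helper: accuracy over the last `window` labeled predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.9, min_samples: int = 20):
        self.outcomes = deque(maxlen=window)  # True = prediction matched ground truth
        self.threshold = threshold
        self.min_samples = min_samples  # avoid alerting on too little data

    def record(self, prediction, actual) -> None:
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def needs_retraining(self) -> bool:
        return len(self.outcomes) >= self.min_samples and self.accuracy < self.threshold

monitor = RollingAccuracyMonitor(window=50, threshold=0.9)
for i in range(50):
    # Simulated feedback: every 4th prediction turns out to be wrong.
    monitor.record(prediction=1, actual=0 if i % 4 == 0 else 1)
print(round(monitor.accuracy, 2), monitor.needs_retraining())
```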

The AI security layer fortifies the entire pipeline by protecting models, APIs, and data with encryption, identity access controls, and adversarial defense strategies.

- Ensures data privacy and role-based access control (RBAC)

- Implements model integrity verification and threat detection

- Complies with regulatory and governance frameworks

- Tools: OAuth2, SSL/TLS, IAM, Vault, Alibi Detect
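One concrete form of model integrity verification is refusing to load a model artifact whose checksum does not match the digest recorded when it was built. The sketch uses `hashlib` and `hmac` from the standard library; where the expected digest is stored (a model registry, signed metadata, or Vault) is left as an assumption, and the file name is hypothetical.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise if the artifact on disk differs from the digest recorded at build time."""
    actual = sha256_of(path)
    # compare_digest performs a constant-time comparison
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"checksum mismatch for {path}: {actual}")

# Usage sketch with a throwaway file standing in for a serialized model.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"   # hypothetical artifact name
    model_path.write_bytes(b"fake model weights")
    recorded = sha256_of(model_path)       # stored at training/packaging time
    verify_artifact(model_path, recorded)  # passes silently
    model_path.write_bytes(b"tampered weights")
    try:
        verify_artifact(model_path, recorded)
    except ValueError as err:
        print("blocked:", err)
```

A checksum only detects modification; proving who produced the artifact additionally requires signing the digest.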

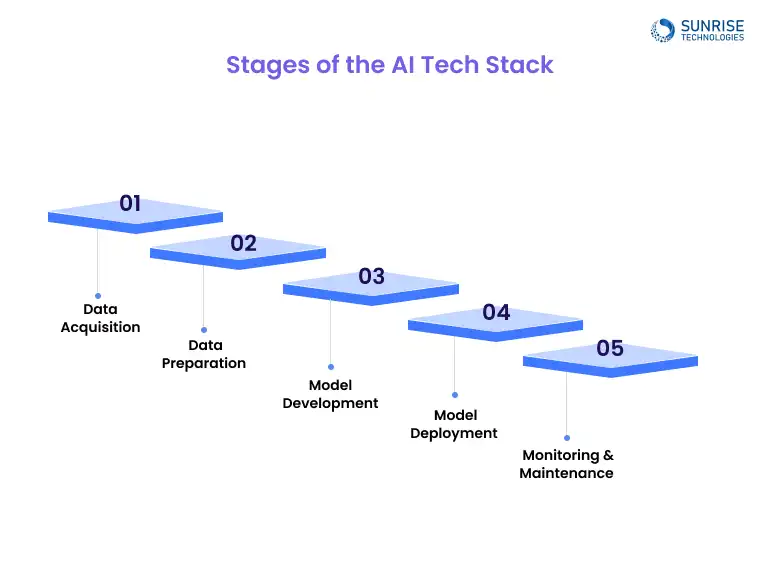

Stages of the AI Tech Stack

Understanding the stages of an AI tech stack helps enterprises design end-to-end AI systems, from raw data to real-world impact. Each stage plays a vital role in developing, deploying, and maintaining intelligent applications that scale.

The first stage, data acquisition, is akin to sourcing the bricks, wood, and other materials before building a house. It involves collecting the raw data that will fuel your AI models. This data can come from various sources, such as:

- Internal Databases: Customer data, sales records, operational logs.

- External APIs: Social media data, weather information, financial market feeds.

- Sensors and IoT Devices: Real-time data from industrial equipment, environmental monitors.

- Web Scraping: Extracting publicly available data from websites.

The key here is to gather relevant and high-quality data that aligns with your AI goals. The tools and technologies used in this stage might include data connectors, API clients, and web scraping libraries.

Once you have your raw materials, you need to prepare them for construction. Data preparation involves cleaning, transforming, and structuring the acquired data into a format suitable for training AI models. This crucial stage includes:

- Data Cleaning: Handle missing values, remove outliers, and fix inconsistencies (e.g., Pandas)

- Data Transformation: Scale, encode, or reshape data to fit ML models (e.g., Scikit-learn)

- Feature Engineering: Derive useful features to boost performance

- Data Labeling: Tag data for supervised learning tasks

This stage is often the most time-consuming but is critical for building accurate and reliable AI models.
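The cleaning and transformation steps above can be sketched with the standard library: median imputation for missing numeric values, min-max scaling, and one-hot encoding of a categorical column. The column names and records are hypothetical; in practice Pandas (`fillna`) and Scikit-learn (`SimpleImputer`, `MinMaxScaler`, `OneHotEncoder`) cover the same ground with far more options.

```python
import statistics

# Hypothetical raw records with gaps (None) in numeric columns
rows = [
    {"age": 34, "income": 52000.0, "plan": "basic"},
    {"age": None, "income": 61000.0, "plan": "pro"},
    {"age": 45, "income": None, "plan": "basic"},
    {"age": 29, "income": 48000.0, "plan": "enterprise"},
]

def impute_median(rows, column):
    """Replace missing (None) values with the median of the observed values."""
    median = statistics.median(r[column] for r in rows if r[column] is not None)
    return [{**r, column: median if r[column] is None else r[column]} for r in rows]

def min_max_scale(rows, column):
    """Rescale a numeric column to the [0, 1] range."""
    values = [r[column] for r in rows]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # constant column: avoid division by zero
    return [{**r, column: (r[column] - lo) / span} for r in rows]

def one_hot(rows, column):
    """Expand a categorical column into 0/1 indicator columns."""
    categories = sorted({r[column] for r in rows})
    out = []
    for r in rows:
        encoded = {k: v for k, v in r.items() if k != column}
        encoded.update({f"{column}={c}": int(r[column] == c) for c in categories})
        out.append(encoded)
    return out

prepared = rows
for col in ("age", "income"):
    prepared = impute_median(prepared, col)
    prepared = min_max_scale(prepared, col)
prepared = one_hot(prepared, "plan")
for r in prepared:
    print(r)
```

In a real pipeline, the medians and min/max values should be computed on the training split only and then reused on validation and production data, otherwise information leaks across splits.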

Model development is where the actual “intelligence” is built, like constructing the core structure of the house. It involves selecting appropriate AI algorithms, training them on the prepared data, and fine-tuning their parameters to achieve the desired performance. Key aspects include:

- Algorithm Selection: Choose an ML/DL algorithm based on the problem type

- Model Training: Fit the model to the data using appropriate training techniques (leveraging GPUs or cloud resources)

- Hyperparameter Tuning: Fine-tune settings for optimal performance

- Model Evaluation: Validate the model with metrics such as accuracy and F1 score

Once your AI model is trained and evaluated, you need to make it accessible for real-world use, like making your house livable. Model deployment involves integrating the trained model into your existing applications or systems. This can involve:

- API Development: Serve predictions via Flask/FastAPI endpoints

- Cloud Deployment: Host models on AWS/GCP/Azure for scale

- Edge Deployment: Run models on IoT/edge for low-latency use

- Containerization: Package models using Docker for portability
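The API development step usually means wrapping a predict function in a JSON endpoint. To stay framework-neutral and dependency-free, this sketch uses Python's built-in `http.server` instead of Flask or FastAPI. The `/predict` route, the payload shape, and the linear `score` function are hypothetical stand-ins for a real trained model. The server is defined but not started, so the request-handling logic can be exercised on its own.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical model: a fixed linear score standing in for a trained artifact.
WEIGHTS = {"tenure_months": -0.04, "support_tickets": 0.3}
BIAS = 0.1

def score(features: dict) -> float:
    return BIAS + sum(w * float(features[name]) for name, w in WEIGHTS.items())

def handle_predict(body: bytes) -> tuple:
    """Validate a JSON request body and return (status_code, response_dict)."""
    try:
        payload = json.loads(body)
        features = payload["features"]
        missing = [name for name in WEIGHTS if name not in features]
        if missing:
            return 422, {"error": f"missing features: {missing}"}
        return 200, {"score": round(score(features), 4)}
    except (ValueError, KeyError, TypeError) as exc:
        return 400, {"error": f"bad request: {exc}"}

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, result = handle_predict(self.rfile.read(length))
        data = json.dumps(result).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

def serve(port: int = 8000) -> None:
    """Blocking: call serve(), then POST JSON to http://127.0.0.1:8000/predict."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Keeping `handle_predict` separate from the HTTP plumbing makes it easy to unit test and to port to Flask or FastAPI later, which is the same decoupling the containerized deployment step relies on.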

Just like a house requires regular maintenance, AI models need ongoing monitoring and updates to ensure they continue to perform effectively over time. Monitoring and maintenance involves:

- Performance Monitoring: Track metrics like accuracy and latency (e.g., Prometheus)

- Data Drift Detection: Spot input data changes affecting results

- Model Retraining: Periodically refresh models with new data

- Model Versioning: Manage iterations and lineage (e.g., MLflow)

- Issue Resolution: Detect and fix errors in production models

How to Choose the Right AI Tech Stack for Your Business

When it comes to selecting the right AI stack, it’s not just about picking the best frameworks. People are always asking things like, “How do I choose the best AI tools for my business?” or “Which AI framework is best for a SaaS startup?” These are the kinds of big-picture questions that should guide your choices.

- Define Your Business Goals: Are you looking to optimize operations, personalize user experiences, or enable predictive analytics? Your AI goals should directly influence the stack selection.

- Assess Your Budget: Evaluate all financial aspects, including software, infrastructure, cloud services, and team expertise, to keep your AI strategy budget-conscious.

- Evaluate Your Data: Audit your data variety, volume, and velocity, and choose tools that align with your data pipelines: real-time, batch, or hybrid.

- Map the Stack to Your Use Case: Match each AI stack layer (data, model, infrastructure, etc.) to your use case. Go modular, and avoid one-size-fits-all platforms unless they offer flexibility.

- Pilot Before You Commit: Run pilot projects to validate the chosen stack’s performance, ease of integration, and ROI. Benchmark results against business KPIs.

- Plan for Scalability: Ensure your stack scales with your ambitions, from MVP to enterprise-grade deployments. Opt for tools with community backing and cloud-native compatibility.

- Build in Security and Compliance: For regulated industries such as healthcare and fintech, ensure built-in compliance, data encryption, and access control mechanisms.
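One way to make these selection criteria comparable is a weighted decision matrix: score each candidate stack per criterion, weight the criteria by business priority, and rank the candidates by weighted total. The candidate names, weights, and 1 to 5 scores below are placeholders invented for illustration, not benchmark results; substitute figures from your own pilots and cost estimates.

```python
# Hypothetical weights (must sum to 1.0) reflecting one organization's priorities.
CRITERIA_WEIGHTS = {
    "goal_fit": 0.25,
    "cost": 0.20,
    "data_fit": 0.15,
    "pilot_results": 0.20,
    "scalability": 0.10,
    "compliance": 0.10,
}

# Placeholder 1-5 scores per candidate stack; replace with your own assessments.
CANDIDATES = {
    "managed-cloud": {"goal_fit": 4, "cost": 3, "data_fit": 4, "pilot_results": 4,
                      "scalability": 5, "compliance": 4},
    "open-source-self-hosted": {"goal_fit": 4, "cost": 4, "data_fit": 3, "pilot_results": 3,
                                "scalability": 4, "compliance": 3},
}

def rank_stacks(candidates: dict, weights: dict) -> list:
    """Return (name, weighted_score) pairs sorted from best to worst."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    totals = {
        name: sum(weights[criterion] * scores[criterion] for criterion in weights)
        for name, scores in candidates.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)

for name, total in rank_stacks(CANDIDATES, CRITERIA_WEIGHTS):
    print(f"{name}: {total:.2f}")
```

The ranking is only as good as the inputs: the value of the exercise is forcing explicit weights and evidence-backed scores, not the final number.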


What is MLOps in the AI Tech Stack?

MLOps in the AI tech stack refers to the set of practices and tools that streamline and automate the entire lifecycle of machine learning models, from development to deployment and ongoing maintenance in production. It bridges the gap between data science and IT operations, ensuring that AI models are reliable, scalable, and deliver continuous value. Core MLOps practices include:

- Model Versioning and Tracking: Keeping track of different versions of models and their performance.

- Automated Deployment Pipelines: Streamlining the process of deploying trained models into production.

- Continuous Integration and Continuous Delivery (CI/CD) for ML: Automating the testing and deployment of model updates.

- Model Monitoring and Logging: Tracking model performance in real-time and identifying potential issues.

- Data and Feature Engineering Pipelines: Automating the process of preparing and transforming data for model training and inference.
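Model versioning and automated promotion can be illustrated with a tiny in-memory registry: every trained model is registered as a new version with its metrics, and a CI/CD-style gate promotes it to production only if it beats the current production version by a margin. The `ModelRegistry` class, its methods, and the `s3://` URIs are hypothetical; they only mimic the idea behind tools like MLflow's Model Registry and are not its API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    metrics: Dict[str, float]

@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: versions are append-only, one is 'production'."""
    versions: List[ModelVersion] = field(default_factory=list)
    production: Optional[int] = None

    def register(self, artifact_uri: str, metrics: Dict[str, float]) -> ModelVersion:
        mv = ModelVersion(len(self.versions) + 1, artifact_uri, metrics)
        self.versions.append(mv)
        return mv

    def get(self, version: int) -> ModelVersion:
        return self.versions[version - 1]

    def promote_if_better(self, candidate: ModelVersion, metric: str = "f1",
                          min_gain: float = 0.0) -> bool:
        """Promotion gate: candidate must beat production on `metric` by more than min_gain."""
        if self.production is None:
            self.production = candidate.version
            return True
        current = self.get(self.production).metrics[metric]
        if candidate.metrics[metric] > current + min_gain:
            self.production = candidate.version
            return True
        return False  # keep the current production model

registry = ModelRegistry()
v1 = registry.register("s3://models/churn/v1", {"f1": 0.81})
v2 = registry.register("s3://models/churn/v2", {"f1": 0.79})
v3 = registry.register("s3://models/churn/v3", {"f1": 0.84})
for mv in (v1, v2, v3):
    promoted = registry.promote_if_better(mv, min_gain=0.01)
    print(mv.version, promoted, "production:", registry.production)
```

Because rejected versions stay registered, a regression like v2 remains traceable and can be compared or rolled back to later.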

MLOps Platforms and Tools Powering the AI/ML Industry

| MLOps Platform/Tool | Description | Use Case |
|---|---|---|
| Kubeflow | Open-source ML toolkit for Kubernetes | End-to-end ML pipeline orchestration |
| MLflow | Open-source platform for managing the ML lifecycle | Model management and tracking |
| TensorFlow Extended (TFX) | TensorFlow-based ML production pipeline | Production ML pipeline for TensorFlow models |
| Seldon | Open-source platform for deploying ML models at scale | Real-time model deployment and monitoring |
| DataRobot | Enterprise AI platform for automated machine learning | Automated model building and deployment |
| Amazon SageMaker | AWS service for building, training, and deploying ML models | Scalable, fully managed ML solutions |
| Azure ML | Microsoft Azure platform for managing ML models | End-to-end model management on Azure |
| ClearML | End-to-end MLOps platform | Model tracking and collaboration |
| DVC (Data Version Control) | Version control for machine learning projects | Version control for data and models |
| Weights & Biases | Platform for tracking and visualizing ML experiments | Model training tracking and visualization |

How to Optimize Your Existing AI Tech Stack

If your AI tech stack is already up and running, there is usually room to make it better. Here are some practical ways to get more out of the frameworks and tools already integrated into your systems:

1. Identify Bottlenecks: Analyze current workflows to locate delays or inefficiencies caused by tool or process limitations.

2. Evaluate Cloud Costs: Review cloud spending and optimize resource usage. Leverage serverless computing or auto-scaling features.

3. Adopt MLOps Practices: Integrate MLOps tools to automate training, deployment, and monitoring workflows for efficiency and reliability.

4. Regularly Review and Upgrade: Stay updated on newer versions of AI frameworks and libraries for performance and security benefits.

5. Consolidate Tools: Eliminate redundancies by combining overlapping tools to simplify management and reduce licensing costs.

6. Invest in Training: Equip teams with upskilling programs to enhance effective usage and fine-tuning of your AI stack.

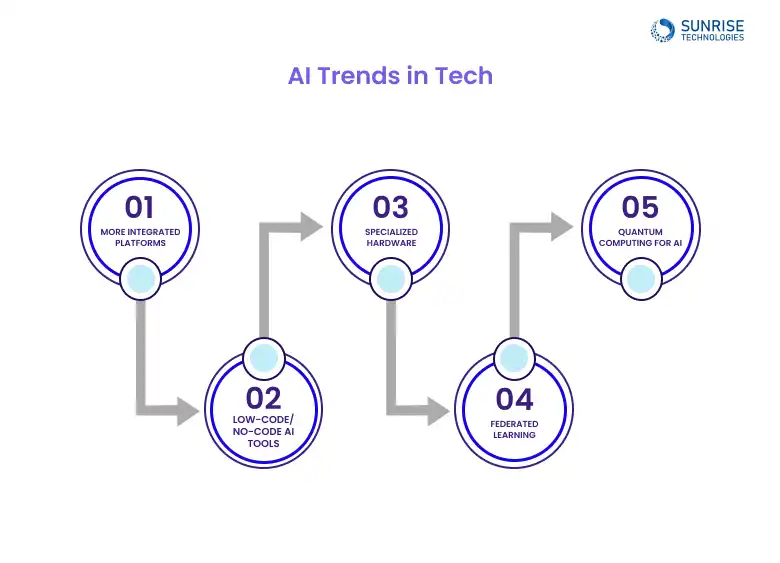

Future Trends in the AI Tech Stack

As AI continues to evolve, the tech stack supporting AI applications will undergo significant transformation. Expect to see:

- Integrated Cloud AI Platforms: Cloud providers are offering increasingly integrated AI platforms that simplify the development and deployment process.

- Low-Code/No-Code AI Platforms: These platforms will empower more business users to build and deploy simple AI applications without extensive coding knowledge.

- Specialized AI Hardware: More efficient and specialized hardware (beyond GPUs and TPUs) will emerge for specific AI tasks.

- Federated Learning: Techniques that allow AI models to be trained on decentralized data without sharing sensitive information.

- Quantum Computing: While still in its early stages, quantum computing has the potential to revolutionize certain AI tasks.
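Federated learning is commonly built on federated averaging (FedAvg): each client trains locally and sends only model parameters, and a server averages them weighted by each client's number of training examples. The sketch below shows just that aggregation step on plain lists of weights; the client values are made up, and real systems add secure aggregation, client sampling, and many training rounds.

```python
from typing import List, Sequence, Tuple

def federated_average(client_updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Average client parameter vectors, weighted by each client's sample count.

    client_updates: (parameters, num_examples) pairs; raw training data never
    leaves the client, only these parameters do.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for params, n in client_updates:
        if len(params) != dim:
            raise ValueError("all clients must send parameters of the same shape")
        for i, p in enumerate(params):
            averaged[i] += p * n / total
    return averaged

# Three hypothetical clients (e.g. hospitals) with different amounts of local data.
updates = [([0.2, 1.0], 100), ([0.4, 0.8], 300), ([0.1, 1.2], 100)]
print(federated_average(updates))
```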

How Sunrise Technologies Can Help You Choose the Right AI Tech Stack

With so many options available, selecting the right AI tech stack can feel overwhelming. At Sunrise Technologies, we simplify this process by guiding you through the complexities of AI technologies and helping you build a custom, affordable AI application aligned with your goals.

1. Tailored AI Solutions for Your Business

2. Expertise Across All Layers of AI Tech Stacks

3. Optimized for Scalability and Performance

4. Cost-Effective AI Solutions

5. End-to-End AI Lifecycle Management

6. Staying Ahead with Emerging Trends

7. Holistic Approach to Data Privacy and Security

8. Seamless Integration with Existing Tech Ecosystem

Final Thoughts

In 2025 and beyond, businesses that harness the right AI tech stack won’t just compete; they’ll lead. From data ingestion to model deployment and monitoring, each layer of your stack must align with your operational goals and industry-specific demands. Whether you are a startup experimenting with AI prototypes or an enterprise optimizing real-time analytics, building on proven, scalable tools such as TensorFlow, Kubernetes, and FastAPI is key to achieving impactful results.

Partnering with a trusted expert like Sunrise Technologies, a top AI software development company, ensures you get more than cutting-edge solutions: you get strategy, scalability, and support. We provide affordable AI app development services designed to help you innovate faster, deploy smarter, and scale securely. Let’s build your intelligent tomorrow, today.


Frequently Asked Questions

What is an AI tech stack?

An AI tech stack is the collection of tools, frameworks, and infrastructure used to develop, deploy, and manage AI solutions. It includes layers such as data processing, model training, infrastructure, APIs, security, and monitoring.

Why is choosing the right AI tech stack important?

The right AI tech stack ensures scalability, performance, security, and seamless integration into business workflows. It helps reduce development time, optimize resources, and deliver high-performing AI applications.

Which AI frameworks are best for startups?

Top frameworks for startups include:

- TensorFlow: Versatile, production-ready

- PyTorch: Research-friendly, dynamic

- Keras: Easy-to-use for quick prototyping

- Hugging Face Transformers: Leading NLP framework

Which tools make up a typical AI tech stack?

A common combination of tools by layer:

- Data Layer: Apache Kafka, AWS S3

- Model Layer: TensorFlow, PyTorch

- Infrastructure: Kubernetes, Docker, AWS

- Deployment: FastAPI, Flask

- Monitoring: Prometheus, Grafana

What are the common challenges in building an AI tech stack?

Some challenges include:

- Handling unstructured and large datasets

- Ensuring model reliability in production

- Managing real-time inference at the edge

- Integrating multiple tools smoothly

- Meeting compliance and security requirements

How can I optimize my existing AI tech stack?

To optimize your AI tech stack:

- Identify bottlenecks in workflows

- Optimize cloud resource usage and costs

- Implement automated MLOps pipelines

- Consolidate redundant tools

- Regularly update libraries and frameworks

Sam is a chartered professional engineer with over 15 years of extensive experience in the software technology space. Over the years, Sam has held the position of Chief Technology Consultant for tech companies both in Australia and abroad before establishing his own software consulting firm in Sydney, Australia. In his current role, he manages a large team of developers and engineers across Australia and internationally, dedicated to delivering the best in software technology.